Discretized Streams (DStreams)

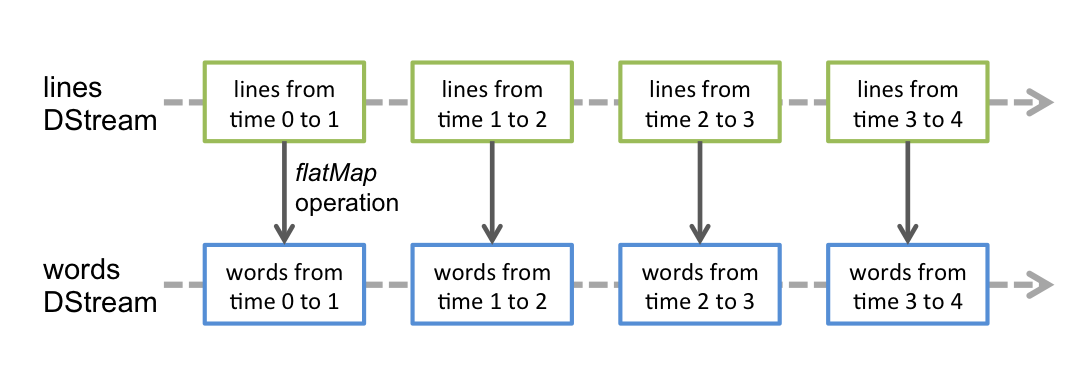

Discretized Stream or DStream is the basic abstraction provided by Spark Streaming. It represents a continuous stream of data, either the input data stream received from source, or the processed data stream generated by transforming the input stream. Internally, a DStream is represented by a continuous series of RDDs, which is Spark’s abstraction of an immutable, distributed dataset (see Spark Programming Guide for more details). Each RDD in a DStream contains data from a certain interval, as shown in the following figure.

Any operation applied on a DStream translates to operations on the underlying RDDs. For example, in the earlier example of converting a stream of lines to words, the flatMap operation is applied on each RDD in the lines DStream to generate the RDDs of the words DStream. This is shown in the following figure.

These underlying RDD transformations are computed by the Spark engine. The DStream operations hide most of these details and provide the developer with a higher-level API for convenience. These operations are discussed in detail in later sections.
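The per-batch behaviour described above can be modelled in plain Python, without Spark. This is only a conceptual sketch: each inner list stands in for the RDD of one batch interval, and the helper name dstream_flat_map and the sample lines are assumptions made for illustration, not part of the Spark API.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def dstream_flat_map(
    batches: List[List[T]], func: Callable[[T], Iterable[U]]
) -> List[List[U]]:
    """Apply a flatMap-style function to every batch independently.

    Each inner list is a stand-in for the RDD of one batch interval
    (a hypothetical model, not Spark's real DStream class).
    """
    return [[out for item in batch for out in func(item)] for batch in batches]


# Stand-in for the lines DStream: one inner list per batch interval.
lines = [
    ["hello world", "spark streaming"],  # batch for interval 0
    ["hello again"],                     # batch for interval 1
    [],                                  # an interval in which no data arrived
]

# Stand-in for the words DStream: same number of batches, each one transformed.
words = dstream_flat_map(lines, lambda line: line.split(" "))
print(words)
```

The transformed stream has exactly one batch per input batch, which mirrors how each RDD of the words DStream is derived from the corresponding RDD of the lines DStream.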

Input DStreams and Receivers

Input DStreams are DStreams representing the stream of input data received from streaming sources. In the quick example, lines was an input DStream as it represented the stream of data received from the netcat server. Every input DStream (except file stream, discussed later in this section) is associated with a Receiver (Scala doc, Java doc) object which receives the data from a source and stores it in Spark’s memory for processing.

Spark Streaming provides two categories of built-in streaming sources.

- Basic sources: Sources directly available in the StreamingContext API. Examples: file systems, socket connections, and Akka actors.

- Advanced sources: Sources like Kafka, Flume, Kinesis, Twitter, etc. are available through extra utility classes. These require linking against extra dependencies as discussed in the linking section.

We are going to discuss some of the sources present in each category later in this section.

Note that, if you want to receive multiple streams of data in parallel in your streaming application, you can create multiple input DStreams (discussed further in the Performance Tuning section). This will create multiple receivers which will simultaneously receive multiple data streams. But note that a Spark worker/executor is a long-running task, hence it occupies one of the cores allocated to the Spark Streaming application. Therefore, a Spark Streaming application needs to be allocated enough cores (or threads, if running locally) to process the received data, as well as to run the receiver(s).

Points to remember

- When running a Spark Streaming program locally, do not use “local” or “local[1]” as the master URL. Either of these means that only one thread will be used for running tasks locally. If you are using an input DStream based on a receiver (e.g. sockets, Kafka, Flume, etc.), then the single thread will be used to run the receiver, leaving no thread for processing the received data. Hence, when running locally, always use “local[n]” as the master URL where n > number of receivers to run (see Spark Properties for information on how to set the master).

- Extending the logic to running on a cluster, the number of cores allocated to the Spark Streaming application must be more than the number of receivers. Otherwise the system will receive data, but not be able to process it.
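The thread-count rule in the points above can be expressed as a small helper. This is a sketch in plain Python: the function name local_master_url and its processing_threads parameter are hypothetical, and the helper only builds the master URL string rather than starting Spark.

```python
def local_master_url(num_receivers: int, processing_threads: int = 1) -> str:
    """Build a local master URL with threads for both receivers and processing.

    Hypothetical helper: each receiver occupies one thread, so at least one
    additional thread must remain to process the received data.
    """
    if num_receivers < 0:
        raise ValueError("num_receivers must be non-negative")
    if processing_threads < 1:
        raise ValueError("at least one thread is needed to process data")
    return f"local[{num_receivers + processing_threads}]"


print(local_master_url(1))     # one receiver plus one processing thread
print(local_master_url(2, 2))  # two receivers plus two processing threads
```

Because the result always reserves at least one thread beyond the receivers, it satisfies the requirement that n be greater than the number of receivers. The same arithmetic applies to the number of cores requested on a cluster.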