Article Source

Latent Network Summarization: Bridging Network Embedding and Summarization

- Authors: Di Jin, Ryan A. Rossi, Eunyee Koh, Sungchul Kim, Anup Rao, Danai Koutra.

- ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), 2019.

Abstract

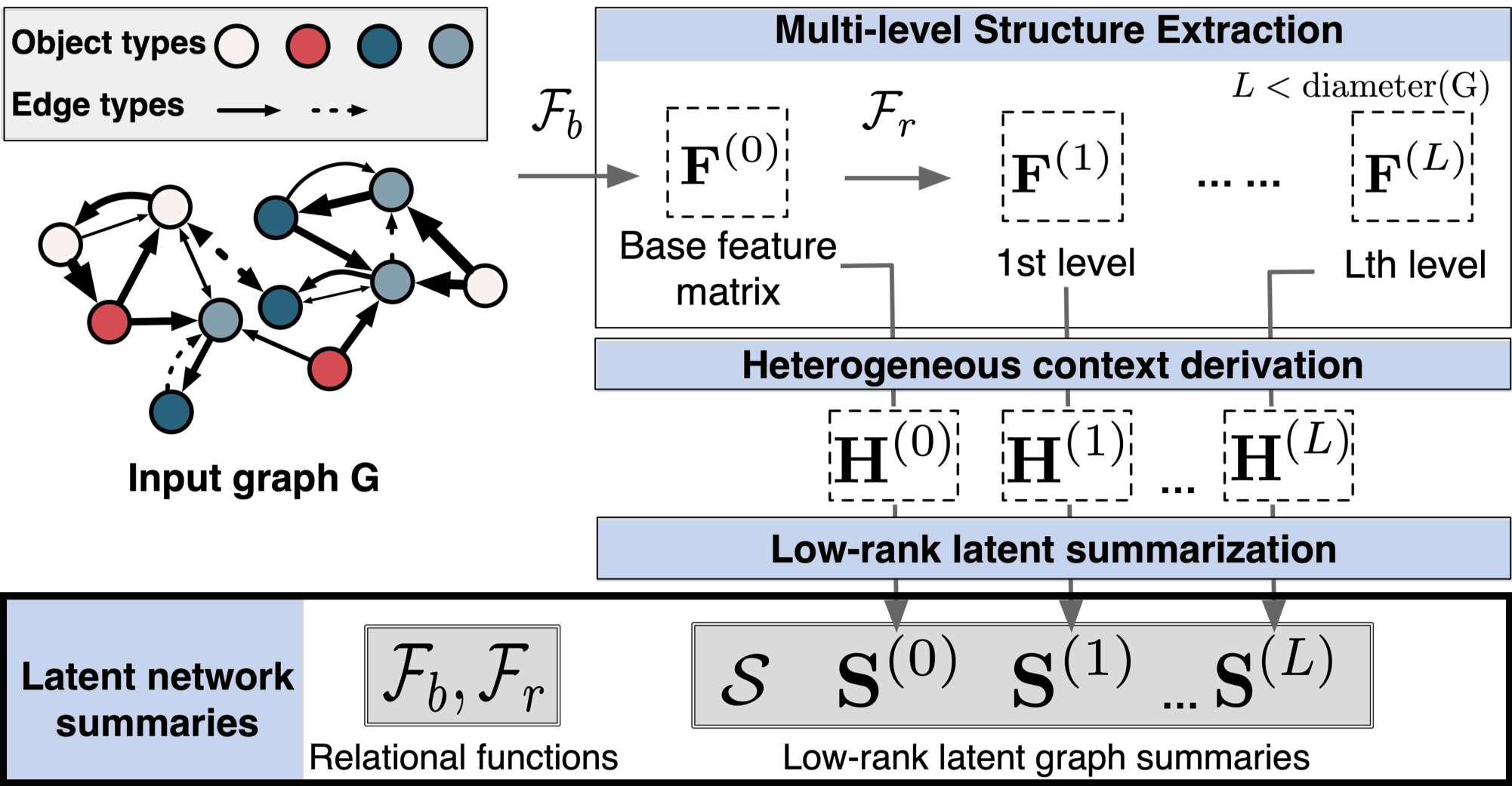

Motivated by the computational and storage challenges that dense embeddings pose, we introduce the problem of latent network summarization that aims to learn a compact, latent representation of the graph structure with dimensionality that is independent of the input graph size (i.e., the number of nodes and edges), while retaining the ability to derive node representations on the fly. We propose Multi-LENS, an inductive multi-level latent network summarization approach that leverages a set of relational operators and relational functions (compositions of operators) to capture the structure of egonets and higher-order subgraphs, respectively. The structure is stored in low-rank, size-independent structural feature matrices, which along with the relational functions comprise our latent network summary. Multi-LENS is general and naturally supports both homogeneous and heterogeneous graphs with or without directionality, weights, attributes or labels. Extensive experiments on real graphs show 3.5–34.3% improvement in AUC for link prediction, while requiring 80–2152× less output storage space than baseline embedding methods on large datasets. As application areas, we show the effectiveness of Multi-LENS in detecting anomalies and events in the Enron email communication graph and Twitter co-mention graph.
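To make the idea of a size-independent summary more concrete, the following is a toy sketch and not the authors' implementation. It assumes node degree as the only base feature, uses sum, mean, and max as stand-in relational operators, composes them twice to reach beyond the egonet, and applies a plain truncated SVD to the resulting feature matrix. All function names (`adjacency`, `neighbor_max`, `relational_features`, `summarize`) and the choice of operators are hypothetical; the paper's actual pipeline may differ in every one of these steps.

```python
import numpy as np

def adjacency(n, edges):
    """Dense symmetric adjacency matrix for a small undirected graph."""
    adj = np.zeros((n, n))
    for u, v in edges:
        adj[u, v] = adj[v, u] = 1.0
    return adj

def neighbor_max(adj, x):
    """Max of each feature column over every node's neighbors (0 if isolated)."""
    masked = np.where(adj[:, :, None] > 0, x[None, :, :], -np.inf)
    out = masked.max(axis=1)
    out[np.isinf(out)] = 0.0
    return out

def relational_features(adj, levels=2):
    """Apply sum/mean/max over neighborhoods, then compose them `levels` times.

    The number of output columns depends only on `levels` and the operator
    set, never on the number of nodes or edges.
    """
    deg = adj.sum(axis=1, keepdims=True)   # assumed base feature: degree
    feats, current = [deg], deg
    for _ in range(levels):
        nbr_sum = adj @ current
        nbr_mean = nbr_sum / np.maximum(deg, 1.0)
        nbr_max = neighbor_max(adj, current)
        current = np.hstack([nbr_sum, nbr_mean, nbr_max])
        feats.append(current)
    return np.hstack(feats)

def summarize(features, k):
    """Keep only the top-k right singular vectors: a (k x F) matrix."""
    _, _, vt = np.linalg.svd(features, full_matrices=False)
    return vt[:k]

# Learn a summary on a 6-node graph.
small = adjacency(6, [(0, 1), (0, 2), (0, 3), (1, 2), (3, 4), (4, 5)])
X_small = relational_features(small)       # 1 + 3 + 9 = 13 feature columns
summary = summarize(X_small, k=4)

# Derive node representations on the fly for an unseen 10-node graph.
new = adjacency(10, [(i, i + 1) for i in range(9)] + [(0, 5)])
emb_new = relational_features(new) @ summary.T

print(summary.shape, emb_new.shape)
```

In this sketch the stored object is only the `summary` matrix plus the recipe for recomputing features, so its size is fixed by the feature count and `k` rather than by the graph. Reusing the same summary on a graph of a different size is a loose illustration of the inductive property the abstract describes.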