Article Source

SageDB: A Self-Assembling Database System

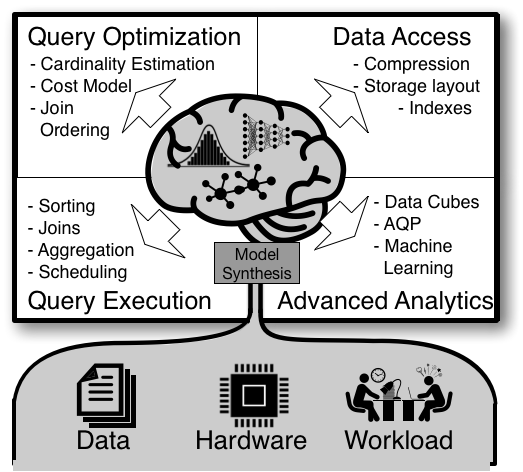

Our vision for research on ML for Systems is laid out in SageDB, a new type of data processing system that highly specializes to a particular application through code synthesis and machine learning.

We provide an overview of data systems components that we are currently working on, with more detailed project descriptions in the links, as well as a list of open-source repositories:

Data Access

Storage layout and index structures are the most important factors to guarantee efficient data access, and both are amenable to be enhanced by learned data and workload models. The original work on learned indexes showed that replacing traditional index structures with learned models can improve data access times while reducing memory footprint. Since then, we have worked to make learned indexes more practical, developed learned multi-dimensional indexes, created a benchmark for learned index structures, and applied learned indexes to DNA sequence search.

Publications:

- The Case for Learned Index Structures. SIGMOD 2018. Tim Kraska, Alex Beutel, Ed Chi, Jeffrey Dean, Neoklis Polyzotis

- SOSD: A Benchmark for Learned Indexes. NeurIPS Workshop on ML for Systems 2019. Andreas Kipf*, Ryan Marcus*, Alexander van Renen*, Mihail Stoian, Alfons Kemper, Tim Kraska, Thomas Neumann

- LISA: Towards Learned DNA Sequence Search. NeurIPS Workshop on Systems for ML 2019. Darryl Ho, Jialin Ding, Sanchit Misra, Nesime Tatbul, Vikram Nathan, Vasimuddin Md, Tim Kraska

- Learning Multi-dimensional Indexes. SIGMOD 2020. Vikram Nathan*, Jialin Ding*, Mohammad Alizadeh, Tim Kraska

- CDFShop: Exploring and Optimizing Learned Index Structures. SIGMOD 2020 (demo). Ryan Marcus, Emily Zhang, Tim Kraska

- ALEX: An Updatable Adaptive Learned Index. SIGMOD 2020. Jialin Ding, Umar Farooq Minhas, Jia Yu, Chi Wang, Jaeyoung Do, Yinan Li, Hantian Zhang, Badrish Chandramouli, Johannes Gehrke, Donald Kossmann, David Lomet, Tim Kraska

- Benchmarking Learned Indexes. VLDB 2021. Ryan Marcus, Andreas Kipf, Alexander van Renen, Mihail Stoian, Sanchit Misra, Alfons Kemper, Thomas Neumann, Tim Kraska

- Tsunami: A Learned Multi-dimensional Index for Correlated Data and Skewed Workloads. VLDB 2021. Jialin Ding, Vikram Nathan, Mohammad Alizadeh, Tim Kraska

Query Execution

Learning the data distribution can also be used to speed up query execution. In particular, we are applying learned techniques to sorting, scheduling, and joins.

Publications:

- Learning Scheduling Algorithms for Data Processing Clusters. SIGCOMM 2019. Hongzi Mao, Malte Schwarzkopf, Shaileshh Bojja Venkatakrishnan, Zili Meng, Mohammad Alizadeh

- The Case for a Learned Sorting Algorithm. SIGMOD 2020. Ani Kristo*, Kapil Vaidya*, Ugur Cetintemel, Sanchit Misra, Tim Kraska

Query Optimization

Traditional query optimizers are extremely hard to build, maintain, and often yield sub-optimal query plans. The brittleness and complexity of the optimizer makes it a good candidate to be learned. We have introduced the first end-to-end learned query optimizer. We are also exploring learning-based cardinality estimation techniques.

Publications:

- Neo: A Learned Query Optimizer. VLDB 2019. Ryan Marcus, Parimarjan Negi, Hongzi Mao, Chi Zhang, Mohammad Alizadeh, Tim Kraska, Olga Papaemmanouil, Nesime Tatbul

- Cost-Guided Cardinality Estimation: Focus Where it Matters. SMDB 2020. Parimarjan Negi, Ryan Marcus, Hongzi Mao, Nesime Tatbul, Tim Kraska, Mohammad Alizadeh

- Bao: Learning to Steer Query Optimizers. Preprint. Ryan Marcus, Parimarjan Negi, Hongzi Mao, Nesime Tatbul, Mohammad Alizadeh, Tim Kraska

Open Source

We have open-sourced a number of our projects:

- Search on Sorted Data Benchmark (SOSD). https://github.com/learnedsystems/sosd.

- Reference implementation of recursive model index (RMI). https://github.com/learnedsystems/RMI.

- Park: An Open Platform for Learning Augmented Computer Systems. https://github.com/park-project/park.

- Reference implementation of Learned Sort. https://github.com/learnedsystems/LearnedSort.

- ALEX: An Updatable Adaptive Learned Index. https://github.com/microsoft/ALEX.

- Bao: Learning to Steer Query Optimizers. https://github.com/learnedsystems/baoforpostgresql.

Vision

Modern data processing systems are designed to be general-purpose, in that they can handle a wide variety of different schemas, data types and data distributions, and provide efficient access to that data through use of optimizers and cost models. The general-purpose nature of current systems results in code bases that don’t take advantage of the particular specification of a user’s application and data. However, recent results from our research members have shown how learned models over the user’s data distribution and workload pattern can significantly enhance and sometimes entirely replace index structures scheduling algorithms

We are just at the beginning of understanding the potential of using learned models to enhance the traditional algorithms and data structures that form the basis of modern DBMSs, such as B-Trees, Bloom filters, hash maps, sorting algorithms, join algorithms and query optimizers. The core idea is that (1) almost any algorithm and data structure used by a data management system can be improved if something is known about the data distribution and (2) we can synthesize very efficient new algorithms and data structures with embedded models. But first, fundamental research must be done to understand the implications of putting learned models at the core of the fundamental algorithms and data structures of a database system (e.g., the query optimizer).

What do models mean for the worst-case complexity? Is it possible to bound the worst-case complexity through certain models or hybrid approaches? How can we efficiently approximate the empirical cumulative distribution function (CDF) of a data set in the least number of instructions? How can we efficiently synthesize algorithms and data structures and how can we enhance models with traditional algorithm components? In addition, learned data structures may be more vulnerable against adversarial attacks, so we propose to address security concerns.

To answer such questions, we will build a Self-Assembling Database System prototype, called SageDB, an open-source research platform for model-driven data processing engines. More concretely, we will investigate to what extent learned models can improve the performance of various components of a large-scale data processing system, such as:

[(1) Query Optimization, where models can improve cardinality estimation and the cost model, or provide more efficient means to explore the optimization space.

[(2) Data Access, where models can predict the location of a key in a database (i.e., indexing), help to compress the data, or optimize the storage format

[(3) Query execution, where models can help find efficient query schedules or potentially speed up sorting or joins.

[(4) Advanced Analytics, where models can be used to approximately answer queries or speed up learning tasks over the data.

This is a highly inter-disciplinary research project as it requires a deep understanding of (1) fundamental algorithms and data structures, (2) data-centric systems, (3) machine learning and (4) program synthesis/compilers.